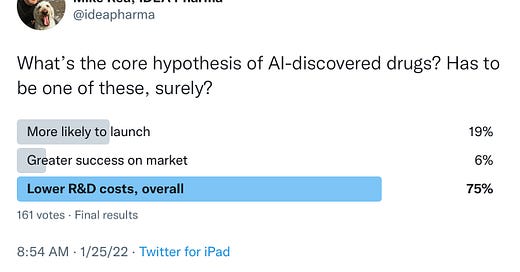

I posted a poll on Twitter this week, asking a genuine question: what is the core idea of AI-based Discovery, given the huge number of deals and dollars going into that space at the moment?

A nice debate broke out under the poll, chasing down a couple of hypotheses. However, I promised this longer post to allow a longer-form discussion (Twitter may be great for speed, but 280 characters does tend to remove nuance…).

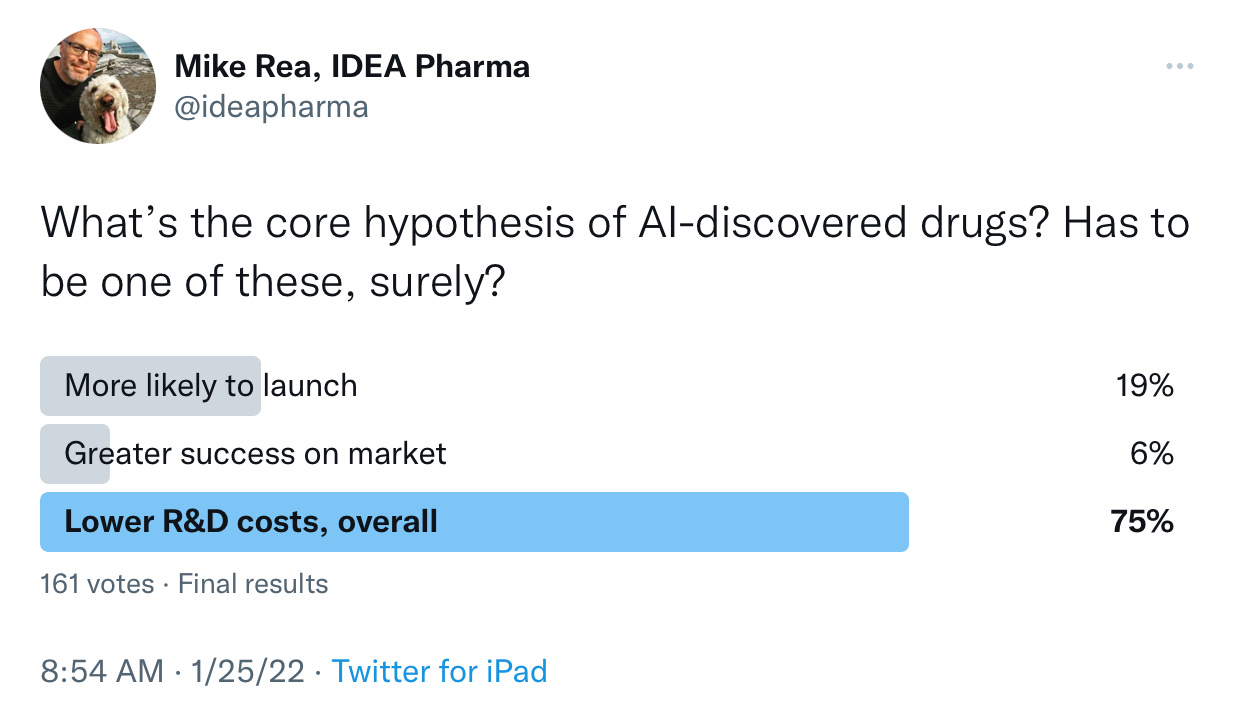

Key to my question is this: once those assets hit the pipeline, do they behave any differently than ‘conventionally discovered’ drugs? Once they’re in the pipeline jar, and you, blindfolded, pull one out, how can you tell the difference…? (Excuse my drawing - I did this one myself…)

One thread can be summarised as: yes, it is a lot easier, faster, cheaper to generate balls for the jar this way. However, that didn’t seem to lead to any greater expectation of success beyond that point.

For me, that is an argument that goes like this: because Development is attritional, it is better to put as many balls in the jar as possible - quantity is more important… I don’t buy this argument. As I’ve written before, pharma’s problem is not starvation, it is indigestion. If those drugs aren’t more likely to launch, or are not better targeted to an unmet market need, the benefits solely accrue to a Discovery organisation incentivised for volume, not to the Development organisation. Development is far more costly than Discovery, and increasing the candidate pool for Development doesn’t make that easier.

Another version of that argument: it enables faster ‘kill’ in early stage. Again, an odd perspective. The ‘kill’ is still provided by conventional hurdles - screening for safety, druggability, etc. Killing drugs early only makes sense if it brings forward bad news that would happen later anyway. We’re not seeing pharma (in general) focus on finding out bad news early - it’s often leaving it to Phase III or to the market to kill its drugs… Failure for Business Plan is the biggest rate-limiting step in Development. ‘Killing early’ rather ignores what early stage should be for - ‘killing’ a drug by planting it in the wrong field is a waste of everyone’s time and seed.

So, where does ‘lowers the cost of R&D’ come from? I’m yet to hear a persuasive argument for exactly where one might see that saving. I am open to it being from some version of ‘improves probability of success’ in Development, especially if that is in later stage, but there’s an absence of evidence for that. (I am aware that we don’t have a lot of evidence in either direction, and that every year is bringing improvements in technology.)

One thread which was more persuasive is that we might see drugs being generated that we couldn’t have expected from the conventional approaches - different pharmacophores, non-obvious directions. Of course, the hurdles remain: synthesis and the other conventional Discovery steps are not sidestepped in that hypothesis. Greater diversity could increase our chances of success, but that somewhat ignores the existing sources of remarkable target development (nature and evolution remain a very healthy pool for candidates that have already passed some of those hurdles). There is also a rather massive pile of drugs that have been through Phase I but not taken forward for business reasons.

I worry that this perspective is a version of the first: if the incentives for quantity of candidates remain focused on early-stage metrics, including IP-based metrics, we should acknowledge that - patenting ‘what ifs’ and novel targets may well be financially exciting, even if those drugs end up going nowhere.

There is a proposition, played out in press releases: the ability to focus Discovery down into areas that Development have already prioritised. ‘I want a candidate that will target Disease x’ happens a lot, and it may be easier to tell a smart computer to give you one than your Discovery colleagues.

So, I am not yet arriving at a conclusion. Many folks smarter than I are heavily invested in AI-based Discovery. I may believe that AI is better pointed at Development decisions (that’s why we started Protodigm, after all…). However, there’s great muddiness in what people are hoping to see - it seems clear that most of the excitement is not in the expectation that we’ll see more of those drugs launching successfully, but in the bubble of money that surrounds early stage.

I thought I’d hear a clear argument - and I continue to apply my ‘keep it simple’ rule, because it is easy to get dragged into sexy science, or computer science. I’d love to hear a clear purpose, explained using my jar analogy, or something similar. As far as I know, Substack/LinkedIn don’t have limits on word-count, so please do educate me below…

This is very insightful, Mike. There is so much exuberance about what I call “single node innovations”, where people get excited about one step but forget everything else in the system and lose sight of the big picture. Thank you!

Hi Mike. I think the economic argument is that if it costs, say, $30m* to deliver one single Development Candidate (DC) and you need 20 of these to go through the Development process to generate 1 NME (i.e. assuming a 5% success rate) then Discovery is costing $600m per NME. If AI is genuinely helping to reduce the $30m/DC cost then it can, in theory, have a meaningful effect on the cost of the end-to-end process. But I’m not sure we know if that is genuinely the case yet.
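The arithmetic in that comment can be written out as a minimal sketch. The $30m per Development Candidate and the 5% success rate are the commenter's illustrative figures. The halved cost per candidate is a purely hypothetical "what if", not a reported result, and the function name is made up for this example.

```python
def discovery_cost_per_nme(cost_per_dc_musd: float, success_rate: float) -> float:
    """Discovery spend (in $m) needed to yield one NME.

    cost_per_dc_musd: Discovery cost of one Development Candidate, in $m.
    success_rate: fraction of candidates that survive Development (0 < rate <= 1).
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    # On average, 1 / success_rate candidates are needed for each NME.
    return cost_per_dc_musd / success_rate


# The comment's figures: $30m per candidate, 5% success, so 20 candidates per NME.
baseline = discovery_cost_per_nme(30, 0.05)

# Hypothetical scenario (an assumption, not evidence): AI halves the cost per candidate.
cheaper_candidates = discovery_cost_per_nme(15, 0.05)

print(f"Baseline Discovery cost per NME: ${baseline:.0f}m")
print(f"With a halved cost per candidate: ${cheaper_candidates:.0f}m")
```

Note that this only models the Discovery side of the ledger. It says nothing about Development cost, which is the larger share and which grows with every extra ball put in the jar.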